What Is LDAP and Active Directory?

Lightweight Directory Access Protocol (LDAP) and Active Directory (AD) are core to Identity and Access Management (IAM). Both are long-established technologies: LDAP dates to the early 1990s, and AD shipped with Windows 2000. Both remain popular today. While AD and LDAP mean two distinctly different things, some people use these terms interchangeably.

Lightweight Directory Access Protocol (LDAP)

So, what is LDAP? Lightweight Directory Access Protocol is an open, platform-independent protocol used to access and maintain directory services over a TCP/IP network. It’s considered lightweight because it is a pared-down version of Directory Access Protocol (DAP), the access protocol defined by the older X.500 directory services standard.

LDAP provides a framework for organizing data within a directory. It also standardizes how users, devices, and clients communicate with a directory server. Because LDAP is optimized for speed, it excels at searching through massive volumes of data quickly. LDAP’s scalability makes it an ideal solution for large enterprises that need to authenticate users on platforms with thousands—or even millions—of users.
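To make the hierarchical organization concrete, here is a minimal in-memory sketch of a directory tree and a subtree search. It does not talk to a real LDAP server. The `DIRECTORY` contents, the `example.com` naming, and the `search_subtree` helper are all assumptions made for illustration, and the string matching ignores the case-insensitivity and escaping rules that real distinguished names follow.

```python
from typing import Dict, List

# Hypothetical entries keyed by distinguished name (DN). Each DN reads from
# the most specific component (left) to the root of the tree (right).
DIRECTORY: Dict[str, Dict[str, List[str]]] = {
    "dc=example,dc=com": {"objectClass": ["domain"]},
    "ou=people,dc=example,dc=com": {"objectClass": ["organizationalUnit"]},
    "ou=devices,dc=example,dc=com": {"objectClass": ["organizationalUnit"]},
    "uid=jdoe,ou=people,dc=example,dc=com": {
        "objectClass": ["person"], "uid": ["jdoe"], "cn": ["Jane Doe"],
    },
    "uid=asmith,ou=people,dc=example,dc=com": {
        "objectClass": ["person"], "uid": ["asmith"], "cn": ["Alex Smith"],
    },
    "cn=printer1,ou=devices,dc=example,dc=com": {
        "objectClass": ["device"], "cn": ["printer1"],
    },
}


def search_subtree(base_dn: str, attribute: str, value: str) -> List[str]:
    """Return the DNs at or below base_dn whose attribute contains value."""
    matches = []
    for dn, attrs in DIRECTORY.items():
        # An entry is in scope if it is the base itself or sits beneath it.
        in_scope = dn == base_dn or dn.endswith("," + base_dn)
        if in_scope and value in attrs.get(attribute, []):
            matches.append(dn)
    return sorted(matches)


# Find every person entry under the "people" branch.
print(search_subtree("ou=people,dc=example,dc=com", "objectClass", "person"))
```

A real server indexes attributes rather than scanning every entry, which is part of why directory lookups stay fast at scale.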

Active Directory (AD)

Active Directory (AD) is Microsoft’s proprietary directory service for Windows domain networks. It comprises a database (called a directory) and various services, all of which work together to authenticate and authorize users. The directory contains data that identifies users—for example, their names, phone numbers, and login credentials. It also contains information about devices and other network assets.

AD simplifies IAM by storing information about users, devices, and other resources in a central location. Organizations can enable single sign-on (SSO) to allow users to access multiple resources within a domain using one set of login credentials. AD checks to ensure that users are who they claim to be (authentication) and grants access to resources based on each individual user’s permissions (authorization).
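The distinction between authentication and authorization can be sketched in a few lines. This is not how AD is implemented. It is a simplified model that assumes a hypothetical user store (`USERS`), a hypothetical mapping of resources to permitted groups (`RESOURCE_GROUPS`), and salted password hashes in place of AD's actual credential handling.

```python
import hashlib
import hmac
from enum import Enum


class Decision(Enum):
    GRANTED = "granted"
    NOT_AUTHENTICATED = "not authenticated"
    NOT_AUTHORIZED = "not authorized"


def _hash(password: str, salt: bytes) -> bytes:
    # Derive a salted hash so the plaintext password is never stored.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


# Hypothetical directory data, for illustration only.
_SALT = b"example-salt"
USERS = {
    "jdoe": {
        "salt": _SALT,
        "password_hash": _hash("s3cret!", _SALT),
        "groups": {"engineering"},
    },
}
RESOURCE_GROUPS = {
    "build-server": {"engineering"},
    "payroll-db": {"finance"},
}


def authenticate(username: str, password: str) -> bool:
    """Authentication: is this user who they claim to be?"""
    user = USERS.get(username)
    if user is None:
        return False
    candidate = _hash(password, user["salt"])
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(candidate, user["password_hash"])


def authorize(username: str, resource: str) -> bool:
    """Authorization: do this user's groups permit access to the resource?"""
    allowed = RESOURCE_GROUPS.get(resource, set())
    return bool(USERS[username]["groups"] & allowed)


def request_access(username: str, password: str, resource: str) -> Decision:
    if not authenticate(username, password):
        return Decision.NOT_AUTHENTICATED
    if not authorize(username, resource):
        return Decision.NOT_AUTHORIZED
    return Decision.GRANTED


print(request_access("jdoe", "s3cret!", "build-server"))
```

Keeping the two checks separate means a valid login alone never implies access: the same user can be granted one resource and refused another.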

LDAP vs. Active Directory: What’s the Difference?

The difference between LDAP and Active Directory is that LDAP is a standard application protocol, while AD is a proprietary product. LDAP is an interface for communicating with directory services, such as AD. In contrast, AD provides a database and services for identity and access management (IAM).

LDAP clients communicate with directories through an LDAP server. Some organizations use LDAP servers to store identity data for authenticating users to an application. Because AD is also used to store identity data, people sometimes confuse the two or conflate them as “LDAP Active Directory” or “Active Directory LDAP.” The fact that AD and LDAP work together adds to the confusion that leads people to think of Active Directory as LDAP.

Similarities Between LDAP and Active Directory

Active Directory is a Microsoft application that stores information about users and devices in a centralized, hierarchical database. AD provides a powerful identity and access management solution. Enterprises use AD to authenticate users to access on-prem resources with a single set of login credentials.

Applications typically use the LDAP protocol to query and communicate with directory services. However, LDAP can also perform authentication, including against Active Directory. It does this through a bind operation: the client sends the user’s credentials to the directory server, which authenticates the user and grants access to resources based on that user’s privileges.

While Microsoft uses the more advanced Kerberos protocol as its default authentication method, AD offers organizations the option to implement LDAP instead. LDAP provides a fast and easy method of authentication. It simply verifies the user’s login credentials against the information stored in the AD database. If they match, LDAP grants the user access.
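Applications that authenticate over LDAP commonly follow a "search, then bind" pattern: look up the user's distinguished name (DN), then attempt a bind with that DN and the supplied password. The sketch below simulates that flow against an in-memory stand-in. `FakeDirectory`, its methods, and `ldap_login` are hypothetical names, not part of any LDAP library or of AD. The empty-password guard reflects RFC 4513, under which a simple bind with a DN and an empty password is an unauthenticated bind that clients should not treat as a successful login.

```python
import hashlib
import hmac
from typing import Dict, Optional


class FakeDirectory:
    """In-memory stand-in for an LDAP server (hypothetical, for illustration)."""

    _SALT = b"example-salt"

    def __init__(self) -> None:
        self._entries: Dict[str, Dict[str, object]] = {}

    def _hash(self, password: str) -> bytes:
        return hashlib.pbkdf2_hmac(
            "sha256", password.encode("utf-8"), self._SALT, 100_000
        )

    def add_user(self, dn: str, uid: str, password: str) -> None:
        self._entries[dn] = {"uid": uid, "password_hash": self._hash(password)}

    def find_dn(self, uid: str) -> Optional[str]:
        """Step 1: search for the entry whose uid matches."""
        for dn, attrs in self._entries.items():
            if attrs["uid"] == uid:
                return dn
        return None

    def simple_bind(self, dn: str, password: str) -> bool:
        """Step 2: check the supplied password against the stored credential."""
        entry = self._entries.get(dn)
        if entry is None:
            return False
        return hmac.compare_digest(self._hash(password), entry["password_hash"])


def ldap_login(directory: FakeDirectory, uid: str, password: str) -> bool:
    # Reject empty passwords up front so an unauthenticated bind can never
    # be mistaken for a successful login.
    if not password:
        return False
    dn = directory.find_dn(uid)
    if dn is None:
        return False
    return directory.simple_bind(dn, password)


directory = FakeDirectory()
directory.add_user("uid=jdoe,ou=people,dc=example,dc=com", "jdoe", "s3cret!")
print(ldap_login(directory, "jdoe", "s3cret!"))
```

Because a simple bind sends the password to the server, real deployments typically protect the connection with TLS.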

LDAP and Active Directory Advantages and Disadvantages

LDAP and Active Directory have their respective strengths and weaknesses. Evaluating the pros and cons of each can help organizations decide which one fits their environment.

Advantages

These are the main benefits of using LDAP:

- It is widely supported across many industries.

- It is a standardized, ratified protocol.

- It is available as open-source software and has a very flexible architecture.

- It is lightweight, fast, and highly scalable.

Active Directory also offers many benefits:

- It is highly customizable, making it easy to use, manage, and control access.

- It leverages trust tiers and extensive group policies to provide stronger security than other directory services.

- It includes compliance management features, such as data encryption and auditing.

- Different versions exist for different needs, including federation services and cloud security.

Disadvantages

As a legacy technology, LDAP has a few downsides. Organizations can find these challenges difficult to overcome:

- Its age: LDAP was developed during the early days of the internet.

- It is not well-suited for cloud and web-based applications.

- Setup and maintenance can be very challenging and usually require an expert.

Active Directory has several drawbacks, too. Here are some disadvantages to consider:

- It only runs in Windows environments.

- Because so many services depend on AD for authentication, an AD outage can disrupt access across the entire network.

- Setup and maintenance costs can be high.

- Legacy AD is limiting because it requires on-prem infrastructure.

LDAP and Active Directory Use Cases

So, is there a difference between AD and LDAP when it comes to use cases? Yes, indeed.

LDAP was originally developed for Linux and UNIX environments, but today it works with a wide range of applications and operating systems. Examples of popular applications that support LDAP authentication include OpenVPN, Docker, Jenkins, and Kubernetes. One of the most common use cases for LDAP is as a tool for querying, maintaining, and authenticating access to Active Directory.

In contrast, Active Directory is less flexible than LDAP because it only operates in Microsoft environments. AD excels at managing Windows clients and servers and works well with other Microsoft products, such as SharePoint and Exchange. Because AD and domain-joined Windows devices are tightly integrated, Active Directory is more secure than LDAP.

LDAP or Active Directory: Which One Should You Choose?

LDAP’s speed and scalability make it the better option for large applications that need to authenticate vast numbers of users. Examples of organizations that might benefit from LDAP include companies in the airline industry or wireless telecommunications providers that handle millions of subscriber queries.

Microsoft Active Directory is the most widely used directory service for enterprises. It is a good solution for highly structured organizations, such as large commercial banks and government agencies, which prioritize security and compliance. The typical AD customer relies primarily on Windows-based architecture instead of using cloud-, web-, or Linux-based applications.

Because AD was designed for a traditional, on-prem environment with a clearly defined perimeter, there’s no simple way to move it to the cloud. Likewise, because of its age and on-prem limitations, legacy LDAP isn’t a good option for cloud or hybrid environments, either.

How StrongDM Can Help with LDAP and Active Directory

Both LDAP and Active Directory require an identity provider to enable SSO authentication. This makes it important to choose a flexible infrastructure access platform (IAP) that verifies authentication and uses identity-based authorization with integration capabilities.

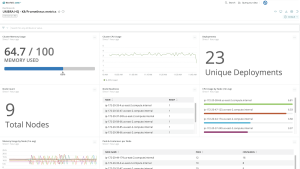

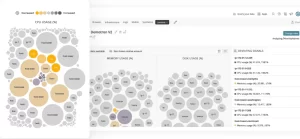

With StrongDM, you can manage identity data and control privileged access to business-critical network resources using AD or another directory service. StrongDM supports all standard protocols, including LDAP. This makes it easy to provide frictionless, secure access to applications, databases, and other network resources.

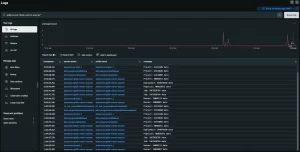

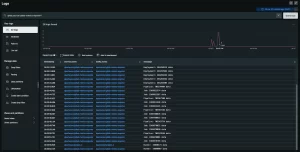

In addition, StrongDM provides deep visibility into user activity, allowing security teams to monitor the entire network in real time. IT administrators can onboard or offboard users, leverage granular controls to manage access, and perform system auditing—all from a single control plane.

Simplify and Strengthen Access Management with StrongDM

As you have seen, there’s a big difference between LDAP and Active Directory, and each has its own use cases. Whether you use Active Directory and LDAP separately or together, both can provide secure authentication and authorization.

StrongDM’s platform is compatible with LDAP, AD, and other popular access management methods. That makes StrongDM a smart choice for enterprises that require reliable connectivity, extensive visibility, and secure access management.

Want to see how StrongDM can streamline user provisioning? Book a demo of StrongDM today.